View Dependent Bump Mapping

Living in California has its benefits. For example, you can spend two hours driving to a beach, and look at hundreds of other people who also had the same idea. Doing this is a great source of inspiration.

People walking in the sand cause the sand to become very pitted in a haphazard sort of fashion. It's an almost ideal case of a surface where modern bump mapping (implemented using normal maps) can make a huge difference. The overall shape of the beach is very sweeping; all the detail comes from the light interacting with the granularly bumpy texture.

However, looking this way and that, trying to scope for hot babes in swimming suits (woefully not present this fine November day), I realize that a regular normal map suffers from a very real problem. The sun is sort of low in the sky, it's after 3 pm, and when looking towards the sea (where the sun is), the sand is very dark. Looking towards land, the sand is still bright. The reason is that the bumps don't only vary the diffuse lighting with each facet's angle to the light; they also occlude one another, so which parts of the surface you actually see depends on the angle of the viewer. Looking towards the sun you mostly see the shadowed sides of the bumps, and looking away you mostly see the lit sides. Traditional normal mapping techniques don't take this into account.

What to do? Well, it seems to me that this problem is at its worst when you glance at a surface at a very shallow angle, and pretty much nonexistent when you look straight at the surface (in the (negative) direction of the "flat" surface normal). Thus, the amount of the problem appears proportional to:

1 - (view dot flatnormal)

For people with a tangent space set-up, the "flatnormal" is the normal vector in their tangent space matrix (as opposed to the binormal and the tangent). That's an interesting observation, but it still doesn't get us quite where we want to be; after all, view dot flatnormal is the same no matter whether we're looking towards the light or away from it.
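A minimal sketch of that observation in Python, assuming the glancing-angle term is 1 - (view dot flatnormal) and a Y-up surface. The function name `glancing_term` and the sample vectors are made up for illustration. It shows that the term is zero head-on, large at shallow angles, and identical whether the viewer faces the sun or has it behind them.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)

def glancing_term(view, flatnormal):
    # Assumed form: 1 - (view dot flatnormal), clamped to [0, 1].
    return min(1.0, max(0.0, 1.0 - dot(view, flatnormal)))

# The normal row of a tangent space matrix for a Y-up surface (hypothetical values).
flatnormal = (0.0, 1.0, 0.0)

# view is the unit vector from the fragment to the viewer.
head_on = glancing_term((0.0, 1.0, 0.0), flatnormal)
viewer_on_plus_x = glancing_term(normalize((1.0, 0.2, 0.0)), flatnormal)
viewer_on_minus_x = glancing_term(normalize((-1.0, 0.2, 0.0)), flatnormal)

print(head_on, viewer_on_plus_x, viewer_on_minus_x)
```

The last two values come out equal, which is exactly why this term alone cannot tell "towards the light" from "away from the light".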

At this point, I realize that we can probably fake the self occlusion by perturbing all normals coming out of our bump map. (I keep saying bump map, and keep meaning normal map, or alternatively, normal-as-derived-from-heightfield-bump-map-using-partial-derivatives-in-fragment-program.) If we slant the normals toward the light when we look away from the light, and away from the light when we look toward the light, we'll get the effect of all-bright sand when looking away, and all-dark sand when looking towards. The term can probably be approximated with:

Where LIGHTPROJ is the light projected to the plane defined by the flatnormal:
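Written out, the projection is the standard one: remove the component of the light vector that lies along the flatnormal. The perturbation line is only one form that fits the description above, and its exact shape is an assumption. It is chosen so that a positive (view dot LIGHTPROJ), meaning you are looking away from the light, slants the normal toward the light, and a negative value slants it away.

```latex
\begin{align*}
\mathrm{LIGHTPROJ} &= \mathit{light} - (\mathit{light} \cdot \mathit{flatnormal})\,\mathit{flatnormal} \\
\mathit{perturbation} &= \mathrm{SCALE}\,(\mathit{view} \cdot \mathrm{LIGHTPROJ})\,\mathrm{LIGHTPROJ}
\end{align*}
```

With this form the perturbation vanishes by itself when view is parallel to flatnormal, since LIGHTPROJ lies in the surface plane.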

view is the direction from the fragment to the viewer (unit length). light is the direction from the fragment to the light (unit length). If you fake these using global or infinite vectors, you'll probably just get lighting that changes when you rotate or tilt your head. We might want to scale the contribution so that we don't perturb at all for view angles within some angle of the normal, such as 45 degrees; that can be taken care of in calculating the SCALE.
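One way the SCALE could be computed is sketched below, as an assumption rather than a tested recipe: zero inside a 45 degree cone around the normal, then a linear ramp (in cosine space) up to a chosen strength at grazing angles. The name `view_scale` and the parameters `strength` and `start_angle_deg` are hypothetical.

```python
import math

def view_scale(view_dot_normal, strength=0.5, start_angle_deg=45.0):
    """Return 0 while the view is within start_angle_deg of the normal,
    then ramp linearly in cosine space up to strength at grazing angles."""
    cos_start = math.cos(math.radians(start_angle_deg))
    # view_dot_normal >= cos_start means the view is still inside the cone.
    t = (cos_start - view_dot_normal) / cos_start
    return strength * min(1.0, max(0.0, t))

for angle in (0, 30, 45, 70, 90):
    cos_angle = math.cos(math.radians(angle))
    print(angle, round(view_scale(cos_angle), 3))
```

A smoother ramp would work just as well; the linear one is only the cheapest thing to express in a fragment program.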

Add this perturbation term to the normal you read out of the bump map, re-normalize, and it should all be good to go.
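The whole procedure can be sketched on the CPU as follows. This is not the fragment program itself. It assumes the perturbation has the form SCALE * (view dot LIGHTPROJ) * LIGHTPROJ, which is a guess that matches the description, and all function names and sample vectors are made up for illustration.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def mul(v, s):
    return tuple(x * s for x in v)

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)

def light_proj(light, flatnormal):
    # Remove the component of light along flatnormal, leaving the in-plane part.
    return add(light, mul(flatnormal, -dot(light, flatnormal)))

def perturbed_normal(bump_normal, view, light, flatnormal, scale):
    proj = light_proj(light, flatnormal)
    # Assumed form of the perturbation: SCALE * (view dot LIGHTPROJ) * LIGHTPROJ.
    offset = mul(proj, scale * dot(view, proj))
    return normalize(add(bump_normal, offset))

def diffuse(normal, light):
    return max(0.0, dot(normal, light))

flatnormal = (0.0, 1.0, 0.0)
light = normalize((1.0, 0.5, 0.0))         # low sun in the +X direction
bump_normal = flatnormal                   # a texel with no bump at all
view_away = normalize((1.0, 0.3, 0.0))     # viewer on the sun's side, looking away from it
view_toward = normalize((-1.0, 0.3, 0.0))  # viewer opposite the sun, looking toward it

plain = diffuse(bump_normal, light)
lit_away = diffuse(perturbed_normal(bump_normal, view_away, light, flatnormal, 0.5), light)
lit_toward = diffuse(perturbed_normal(bump_normal, view_toward, light, flatnormal, 0.5), light)

print(round(lit_toward, 3), round(plain, 3), round(lit_away, 3))
```

The three numbers should come out in increasing order: darker than plain normal mapping when looking toward the light, brighter when looking away.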

So is this sufficient? I don't know. It has the unfortunate side effect of generally darkening everything when you're looking towards the light, and generally brightening everything when you're looking away from the light, for surfaces that are at glancing angles to the viewer. This is not entirely a bad thing, as many rough surfaces have this characteristic inherently. To give artists a little more control, perhaps the amount of view dependent perturbation of the normal map diffuse lighting should be scaled by the inverse of the surface's glossiness (one minus the glossiness). Thus, a fully non-glossy (very rough) surface would get the full effect, whereas a fully glossy (very smooth) surface would get no effect. This might actually be sufficient compensation to be very convincing.
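As a sketch of that artist control, assuming glossiness is stored in the range 0 to 1 and that "inverse" means one minus the glossiness (both assumptions; the function names are hypothetical):

```python
def roughness_weight(glossiness):
    # Clamp to [0, 1], then invert: 1 for fully rough, 0 for fully glossy.
    g = min(1.0, max(0.0, glossiness))
    return 1.0 - g

def weighted_scale(base_scale, glossiness):
    # Scale the perturbation strength by how rough the surface is.
    return base_scale * roughness_weight(glossiness)

print(weighted_scale(0.5, 0.0), weighted_scale(0.5, 0.5), weighted_scale(0.5, 1.0))
```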

A few other weighting options to consider: weight the perturbation by (1-normal.y), assuming your basic normal points up in the Y direction, or high-pass filter the normal map and use the output of that filter (a measure of the amount of local variation) to scale the perturbation.
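Both weights can be sketched in a few lines. The high-pass here is a deliberately crude stand-in, namely the distance between a texel's normal and the mean of its 3x3 neighbourhood; a real filter kernel would likely differ. The function names and the tiny test maps are made up for illustration.

```python
import math

def slope_weight(normal):
    # (1 - normal.y): zero for a texel pointing straight up, larger for tilted ones.
    return 1.0 - normal[1]

def local_variation(normal_map, x, y):
    # Crude high-pass: how far the texel's normal is from its 3x3 neighbourhood mean.
    height, width = len(normal_map), len(normal_map[0])
    total = [0.0, 0.0, 0.0]
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            ny = min(height - 1, max(0, y + dy))  # clamp at the map edges
            nx = min(width - 1, max(0, x + dx))
            for i in range(3):
                total[i] += normal_map[ny][nx][i]
    mean = [c / 9.0 for c in total]
    centre = normal_map[y][x]
    return math.sqrt(sum((centre[i] - mean[i]) ** 2 for i in range(3)))

up = (0.0, 1.0, 0.0)
tilted = (0.6, 0.8, 0.0)
flat_map = [[up] * 3 for _ in range(3)]
bumpy_map = [[up, up, up], [up, tilted, up], [up, up, up]]

print(slope_weight(up), slope_weight(tilted))
print(local_variation(flat_map, 1, 1), local_variation(bumpy_map, 1, 1))
```

In practice the high-pass result would probably be precomputed and stored in a spare texture channel rather than evaluated per fragment.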

One of these days, I'll slam some textures into a test program and see what comes out of this thought. I'll probably post images on this page at that point. In the meantime, if you try this out on your own, or if you know of someone who has written up this technique before me, please drop me a line:

normal-map at mindcontrol dot org

Some additional comments

Trying to do this all per-pixel in a fragment program quickly becomes very expensive; the full program is about 30 instructions (view dependent adjusted normal map + diffuse color + ambient). Interpolating the displacement vector in a texture coordinate set is certainly an option, but as there are three per-fragment dot products in there, that might not look all that great. I'll have to try it.

Illustrations

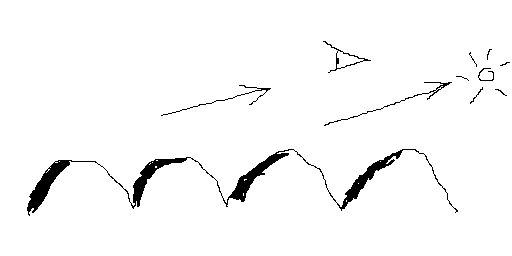

View towards the light: the eye sees mostly shadowed parts of the surface.

View away from the light: the eye sees mostly lit parts of the surface.